A Comprehensive Guide to Caching Strategies in Web API Development

Boosting the performance of your Web API is vital for delivering fast and efficient experiences to your users. Learn the fundamentals, discover different caching strategies, and uncover best practices to optimize your API's performance.

Welcome to another exciting chapter in this series on Web API Development! If you've ever wondered how to make your API lightning-fast and more efficient, caching is a step in the right direction. In the last chapter, I showed you how to implement HATEOAS in your Web API. It had a great impact when I shared it online: many experienced developers shared their experiences with it, since it has its pros and cons, like everything in life. If you want to take a look, I will leave the link to the post below. So, without further ado, let's get into today's post.

What is Caching?

Caching is like having secret storage where you can temporarily store frequently accessed data. Instead of going through the whole process of generating a response from scratch every time, you can retrieve it directly from the cache. It's like having a shortcut to lightning-fast responses!

In the context of Web API, caching involves storing API responses, either partially or in their entirety, so that subsequent requests for the same data can be served directly from the cache. This reduces the need for expensive computations or database queries, resulting in improved response times and better performance.
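To make the idea concrete, here is a minimal sketch of it in plain C#, outside of ASP.NET Core. The names Handle and BuildResponse and the /api/posts path are made up for illustration, and the clock is passed in as a parameter so the expiry behavior is easy to follow. It is not how ASP.NET Core implements caching, just the general pattern.

```csharp
using System;
using System.Collections.Generic;

// Cached responses keyed by request path, each stored with its expiry time.
var responseCache = new Dictionary<string, (string Body, DateTime ExpiresAt)>();
int handlerRuns = 0;

// Hypothetical stand-in for the expensive work behind an endpoint.
string BuildResponse(string path)
{
    handlerRuns++;
    return $"response for {path}";
}

// Serve from the cache while the entry is fresh; otherwise rebuild and store it.
string Handle(string path, DateTime now, TimeSpan lifetime)
{
    if (responseCache.TryGetValue(path, out var cached) && cached.ExpiresAt > now)
    {
        return cached.Body; // cache hit
    }

    var body = BuildResponse(path); // cache miss
    responseCache[path] = (body, now + lifetime);
    return body;
}

var start = new DateTime(2024, 1, 1, 12, 0, 0, DateTimeKind.Utc);
var oneMinute = TimeSpan.FromSeconds(60);

Handle("/api/posts", start, oneMinute);                // miss: runs the handler
Handle("/api/posts", start.AddSeconds(30), oneMinute); // hit: served from the cache
Handle("/api/posts", start.AddSeconds(61), oneMinute); // expired: runs the handler again

Console.WriteLine(handlerRuns); // prints 2
```

Three requests arrive, but the expensive work only runs twice, because the second request falls inside the 60-second lifetime of the first response.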

Benefits of Caching

Caching offers several compelling benefits for your Web API:

1. Lightning-Fast Response Times

By serving responses from the cache, you can skip the time-consuming steps of processing and generating a fresh response. This significantly reduces the overall response time, leading to a smoother and more responsive user experience.

2. Reduced Server Load

Caching helps offload the work from your server by minimizing the number of requests that need to hit your API endpoints. When a request can be fulfilled from the cache, it eliminates the need for processing and querying the underlying data source. This, in turn, reduces the server load, allowing it to handle more concurrent requests and improving the overall scalability of your API.

3. Improved Scalability

With caching in place, your Web API becomes more scalable. By reducing the load on your server, caching enables your application to handle increased traffic and accommodate a larger user base without compromising performance. It helps you make the most of your server's resources and ensure a consistent experience for your users, even during peak usage periods.

Types of Caching

There are different types of caching that you can implement in your Web API:

1. In-Memory Caching

In-memory caching stores the cached data in the memory of your application. It offers lightning-fast access to the cached data but is limited to a single server instance. This type of caching is suitable for scenarios where the cached data is not critical and can be easily recreated if lost.
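As a rough sketch, in-memory caching in .NET usually goes through IMemoryCache from the Microsoft.Extensions.Caching.Memory package. The example below creates a MemoryCache directly so it can run on its own; LoadProductName and the product:{id} key format are hypothetical placeholders for your own data access.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// MemoryCache is the in-process implementation of IMemoryCache.
using var memoryCache = new MemoryCache(new MemoryCacheOptions());
int dataSourceCalls = 0;

// Hypothetical stand-in for a database query.
string LoadProductName(int id)
{
    dataSourceCalls++;
    return $"Product {id}";
}

string GetProductName(int id)
{
    // GetOrCreate runs the factory only when the key is missing or has expired.
    return memoryCache.GetOrCreate($"product:{id}", entry =>
    {
        entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromSeconds(60);
        return LoadProductName(id);
    })!;
}

Console.WriteLine(GetProductName(1));
Console.WriteLine(GetProductName(1)); // second call is answered from memory
Console.WriteLine($"Data source calls: {dataSourceCalls}");
```

In an ASP.NET Core application you would typically call builder.Services.AddMemoryCache() and receive IMemoryCache through constructor injection instead of creating the cache yourself.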

2. Distributed Caching

Distributed caching involves storing the cached data in a shared cache that can be accessed by multiple server instances or even across different machines. This allows for better scalability and resilience, as multiple servers can share the same cache. Distributed caching is commonly used in scenarios where high availability and reliability are required.

3. Client-Side Caching

Client-side caching involves storing the cached data on the client side, typically in the web browser. This is achieved by setting appropriate caching headers in the API responses. Client-side caching can significantly reduce the number of requests made to the server, as the client can use the cached data for subsequent requests. It improves the overall performance and responsiveness of your API.
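The sketch below shows, in a simplified way, how a client might decide whether it can reuse a stored copy based on the Cache-Control header it received. CanReuse is a hypothetical helper, and real browsers apply more rules than this (revalidation, heuristics, and so on), so treat it as an illustration only.

```csharp
using System;
using System.Net.Http.Headers;

// Decide whether a stored copy may be reused, based on the Cache-Control header
// it arrived with and how long ago it was stored.
bool CanReuse(string cacheControl, TimeSpan ageOfCopy)
{
    var directives = CacheControlHeaderValue.Parse(cacheControl);

    // no-store forbids keeping a copy; no-cache requires asking the server first.
    if (directives.NoStore || directives.NoCache) return false;

    // Without max-age this sketch plays safe and asks the server again.
    return directives.MaxAge is TimeSpan maxAge && ageOfCopy < maxAge;
}

Console.WriteLine(CanReuse("public, max-age=60", TimeSpan.FromSeconds(20))); // True
Console.WriteLine(CanReuse("public, max-age=60", TimeSpan.FromSeconds(90))); // False
Console.WriteLine(CanReuse("no-store", TimeSpan.Zero));                      // False
```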

What is Response Caching?

Response caching is a technique that allows you to store the response of an API endpoint and serve it directly from the cache for subsequent requests. Instead of re-computing the response or hitting the database every time, you can leverage response caching to retrieve the data from a cache store. This significantly improves response times, reduces server load, and enhances the overall scalability of your Web API. This is the technique we will be using in this tutorial.

The Response Cache Attribute

In ASP.NET Core Web API, response caching is made easy with the [ResponseCache] attribute. By applying this attribute to your controller or action methods, you can control how the response is cached. The [ResponseCache] attribute provides various options to customize caching behavior, such as cache duration, location, and more.

For example, you can specify the cache duration using the Duration property, like this:

    [HttpGet]
    [ResponseCache(Duration = 60)] // Cache the response for 60 seconds
    public IActionResult Get()
    {
        // Your code here
        return Ok();
    }

The Response Cache Parameters

In ASP.NET Core, the [ResponseCache] attribute gives you different options to control how your API responses are cached. Let's take a look at these options in a beginner-friendly way:

- Duration: This parameter lets you specify how long the response should be cached, in seconds. For example, if you set [ResponseCache(Duration = 60)], the response will be cached for 60 seconds before it needs to be refreshed.
- Location: This parameter determines where the response can be cached. You have three options:
  - ResponseCacheLocation.Any: The response can be cached by both client-side browsers and proxy servers.
  - ResponseCacheLocation.Client: The response can be cached by client-side browsers only.
  - ResponseCacheLocation.None: Neither browsers nor proxy servers should reuse the response (Cache-Control is set to no-cache).
- VaryByHeader: Sometimes, you want to cache responses separately based on specific request headers. This parameter allows you to specify the header that should be considered when deciding if a cached response can be served. For example, if you set [ResponseCache(Duration = 60, VaryByHeader = "User-Agent")], the response will be cached separately for different user agents.
- VaryByQueryKeys: Similar to VaryByHeader, this parameter helps you cache responses separately based on specific query string parameters. For example, if you set [ResponseCache(Duration = 60, VaryByQueryKeys = new[] { "page", "pageSize" })], the response will be cached separately for different values of the page and pageSize query parameters. This option requires the response caching middleware to be enabled; without it, a runtime exception is thrown.
- NoStore: This parameter determines whether the response should be stored in the cache at all. Setting NoStore to true ensures that the response is not cached.

These response cache parameters give you control over how your API responses are cached. By using them, you can specify the caching duration, where the response can be cached, and even cache responses differently based on headers or query parameters. It's a powerful way to optimize your API's performance and reduce server load.
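To see how these options translate into HTTP headers, here is a small sketch. CacheDemoController and its routes are hypothetical and exist only for illustration. The header values in the comments are what I expect based on the ASP.NET Core documentation, so verify them against your own version with a tool like Postman.

```csharp
using Microsoft.AspNetCore.Mvc;

// Hypothetical controller used only to illustrate the attribute options.
[ApiController]
[Route("api/[controller]")]
public class CacheDemoController : ControllerBase
{
    // Expected response headers: Cache-Control: public,max-age=30 and Vary: User-Agent
    [HttpGet("by-agent")]
    [ResponseCache(Duration = 30, VaryByHeader = "User-Agent")]
    public IActionResult ByAgent() => Ok("varies by User-Agent");

    // Expected response header: Cache-Control: private,max-age=60
    [HttpGet("client-only")]
    [ResponseCache(Duration = 60, Location = ResponseCacheLocation.Client)]
    public IActionResult ClientOnly() => Ok("browser cache only");

    // Expected response headers: Cache-Control: no-store,no-cache and Pragma: no-cache
    [HttpGet("never")]
    [ResponseCache(Location = ResponseCacheLocation.None, NoStore = true)]
    public IActionResult Never() => Ok("never cached");
}
```

Note that the attribute on its own only writes headers. Storing and replaying responses on the server is the job of the response caching middleware.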

Response Caching Middleware

ASP.NET Core also provides a built-in response caching middleware that you can add to your API pipeline. The response caching middleware intercepts incoming requests and checks if a cached response exists. If a cached response is found, it's served directly, bypassing the rest of the request pipeline. This saves processing time and improves the API's responsiveness.

To enable response caching middleware, you can add the following code to your Program.cs file:

    builder.Services.AddControllers();
    builder.Services.AddResponseCaching(); // Register the response caching services

    ...

    app.UseResponseCaching(); // Add the middleware before mapping the controllers
    app.MapControllers();

    app.Run();

Cache Profiles

Cache profiles allow you to define caching behavior in a centralized and reusable manner. Instead of repeating the same caching settings for multiple actions, you can define a cache profile and apply it across different endpoints. This promotes consistency and simplifies cache management.

To configure cache profiles, you can add the following code to your Program.cs file:

    builder.Services.AddControllers(options =>
    {
        options.CacheProfiles.Add("DefaultCache",
            new CacheProfile()
            {
                Duration = 60,
                Location = ResponseCacheLocation.Any,
                // Vary the cache by these query parameters
                VaryByQueryKeys = new[] { "page", "pageSize" }
            });
    });

In the code snippet above, we've defined a cache profile named "DefaultCache". It has a duration of 60 seconds, meaning that the response will be cached for that period. We've also set the location to Any, which means both client-side browsers and proxy servers can cache the response. Additionally, we've specified that the cache should vary based on the "page" and "pageSize" query parameters. Feel free to adjust these settings based on your specific needs.

Once the cache profile is defined, you can apply it to your API endpoints. Let's say you have a controller with an action method you want to cache. Simply add the [ResponseCache] attribute to that action method and specify the cache profile name:

    [HttpGet]
    [ResponseCache(CacheProfileName = "DefaultCache")]
    public IActionResult GetData(int page, int pageSize)
    {
        // Your code here
        return Ok();
    }

By setting CacheProfileName to "DefaultCache," you're instructing ASP.NET Core to apply the caching settings defined in the "DefaultCache" cache profile to this specific action method. You can reuse the same cache profile across multiple endpoints to maintain consistent caching behavior.

Using cache profiles in ASP.NET Core 7 simplifies the management of caching settings, making it easier to update or modify caching behavior in the future. It centralizes your caching rules, improving code readability and maintainability.

Defining Cache Profiles in the appsettings.json file

To define a cache profile in the appsettings.json file and use it in your ASP.NET Core application, follow these steps:

- Open your appsettings.json file and add a new section for cache profiles. Here's an example of how it might look:

    {
      "CacheProfiles": {
        "DefaultCache": {
          "Duration": 60,
          "Location": "Any",
          "VaryByQueryKeys": [ "page", "pageSize" ]
        },
        "CustomProfile": {
          "Duration": 120,
          "Location": "Client",
          "VaryByHeader": "User-Agent"
        }
      }
    }

In your Program.cs file, register your cache profiles defined in the appsettings.json file as shown below:

    builder.Services.AddControllers(options =>
    {
        // Read every child of the "CacheProfiles" section
        var cacheProfiles = builder.Configuration
            .GetSection("CacheProfiles")
            .GetChildren();

        // Register each one under its section name, e.g. "DefaultCache"
        foreach (var cacheProfile in cacheProfiles)
        {
            options.CacheProfiles
                .Add(cacheProfile.Key, cacheProfile.Get<CacheProfile>());
        }
    });

Now, you can use the cache profiles in your API endpoints. For example, let's assume you have an action method in your controller that you want to cache using the "DefaultCache" cache profile. Add the [ResponseCache] attribute and specify the cache profile name:

    [HttpGet]
    [ResponseCache(CacheProfileName = "DefaultCache")]
    public IActionResult GetData(int page, int pageSize)
    {
        // Your code here
        return Ok();
    }

- By setting CacheProfileName to "DefaultCache", the caching settings defined in the "DefaultCache" cache profile will be applied to this action method.

That's it! You've defined cache profiles in the appsettings.json file and used them in your ASP.NET Core application. This approach allows you to configure cache profiles externally, making it easier to modify caching behavior without modifying code.

Get the code repo from GitHub

If you want to follow along, get the code from GitHub, and make sure you check out the HATEOAS branch, which contains the code changes up to this tutorial.

Implementing Response Caching in your Web API

First, register the response caching services in the services container and add the response caching middleware, so that the code in the Program file looks like this:

    builder.Services.AddResponseCaching();
    builder.Services.AddControllers()
        .AddNewtonsoftJson(options =>
        {
            options.SerializerSettings.ReferenceLoopHandling = ReferenceLoopHandling.Ignore;
            options.SerializerSettings.NullValueHandling = NullValueHandling.Ignore;
        });

    ...

    app.UseResponseCaching();
    app.MapControllers();

    app.Run();

You can call AddResponseCaching() before or after AddControllers(), but UseResponseCaching() should come before MapControllers().

Add a Response Cache attribute to the Get Endpoints

    [HttpGet]
    [ResponseCache(Duration = 60, Location = ResponseCacheLocation.Any, VaryByQueryKeys = new[] { "*" })]
    public async Task<IActionResult> GetPost([FromQuery] QueryParameters parameters)
    {
        ...
    }

    [HttpGet("{id:int}")]
    [PostExists]
    [ResponseCache(Duration = 60, VaryByQueryKeys = new[] { "*" })]
    public async Task<IActionResult> GetPost(int id)
    {
        ...
    }

For the first GetPost endpoint (the one that takes QueryParameters), the response lifetime is set to 60 seconds with Duration. Location is set to Any, which means that the response can be cached by the client, by proxies, and by the response caching middleware on the server. Lastly, VaryByQueryKeys is set to "*" so that a separate cached response is kept for each distinct combination of query string values. Because this method uses pagination, sorting, and filtering, there will be many cases where the query string changes.

For the GetPost(id) method, a duration of 60 seconds is set as well, and VaryByQueryKeys is set the same way as for the first GetPost method.
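If it helps to picture what varying by query keys means, here is a small sketch of the idea: the cache key is built from the path plus the query parameters that take part in the variation. BuildCacheKey is a hypothetical helper written for this post. It is not the algorithm the response caching middleware actually uses, only an illustration of why a different page value ends up with its own cached response.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Build a lookup key from the path plus the query parameters the cache varies by.
// "*" means every query parameter takes part in the key.
string BuildCacheKey(string path, Dictionary<string, string> query, string[] varyByQueryKeys)
{
    IEnumerable<KeyValuePair<string, string>> selected = varyByQueryKeys.Contains("*")
        ? query
        : query.Where(pair => varyByQueryKeys.Contains(pair.Key, StringComparer.OrdinalIgnoreCase));

    // Sort by name so the order of parameters in the URL does not matter.
    var parts = selected
        .OrderBy(pair => pair.Key, StringComparer.OrdinalIgnoreCase)
        .Select(pair => pair.Key.ToLowerInvariant() + "=" + pair.Value);

    return path + "?" + string.Join("&", parts);
}

var everyKey = new[] { "*" };
var pageOne = new Dictionary<string, string> { ["page"] = "1", ["pageSize"] = "10" };
var pageTwo = new Dictionary<string, string> { ["page"] = "2", ["pageSize"] = "10" };

Console.WriteLine(BuildCacheKey("/api/posts", pageOne, everyKey)); // /api/posts?page=1&pagesize=10
Console.WriteLine(BuildCacheKey("/api/posts", pageTwo, everyKey)); // /api/posts?page=2&pagesize=10
```

Two different keys mean two separate cache entries, so a request for page 2 never receives the cached response for page 1.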

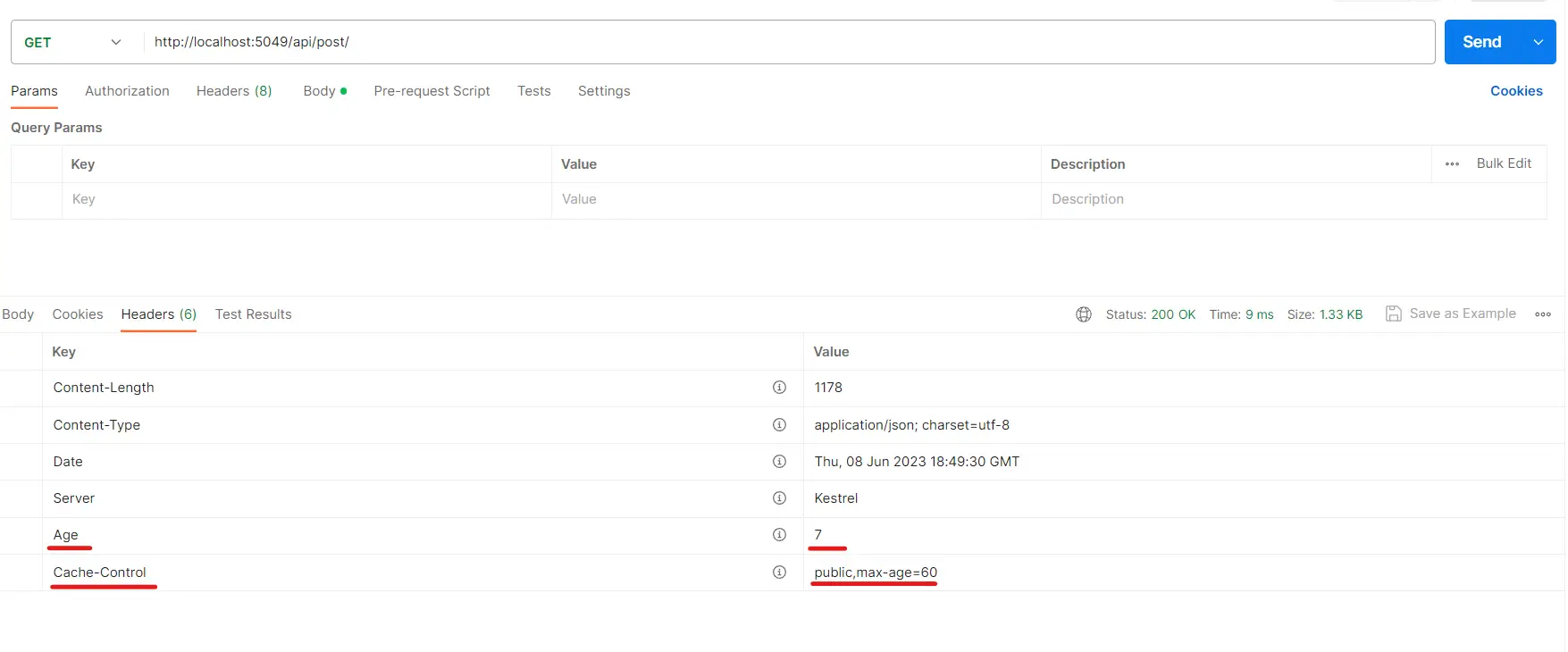

Testing your application

Now you are ready to test your application. Using a tool like Postman, call any of these endpoints and check the response headers. There you will see the Cache-Control header, whose max-age value indicates how long the cached response will last; in this case it is 60 seconds for both endpoints. The first time you call an endpoint, you will only see the Cache-Control header. If you call the same endpoint again without changing any query string parameter, you will also see a header named Age, which contains the age in seconds of the cached response. Its presence means you are getting a previously stored response instead of a freshly generated one, which saves resources in your application. If the Age header never appears, check whether your client sends a Cache-Control: no-cache request header (Postman has a setting for this), because the middleware honors it and skips the cache.
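If you prefer to check this from code rather than Postman, the same headers can be read with HttpClient types. The sketch below uses a hand-built response so it runs without a server; DescribeCaching is a hypothetical helper, and the header values are sample data that mimic a second call to a cached endpoint.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Net.Http.Headers;

// Summarize what the caching headers of a response tell us.
string DescribeCaching(HttpResponseMessage response)
{
    TimeSpan? maxAge = response.Headers.CacheControl?.MaxAge;
    TimeSpan? age = response.Headers.Age;

    if (maxAge is null) return "not cacheable: no max-age in Cache-Control";
    if (age is null) return "generated by the endpoint (no Age header)";
    return "served from a cache (Age header present)";
}

// Hand-built response that mimics a second call to a cached endpoint.
var cachedResponse = new HttpResponseMessage(HttpStatusCode.OK);
cachedResponse.Headers.CacheControl = new CacheControlHeaderValue
{
    Public = true,
    MaxAge = TimeSpan.FromSeconds(60)
};
cachedResponse.Headers.Age = TimeSpan.FromSeconds(12);

Console.WriteLine(DescribeCaching(cachedResponse)); // served from a cache (Age header present)
```

Against a running API, you would pass in the result of an HttpClient GetAsync call to one of your endpoints instead of the hand-built response.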

Conclusion

Caching is a powerful technique that can greatly enhance the performance and scalability of your Web API. By leveraging caching, you can achieve lightning-fast response times, reduce server load, and improve the overall user experience. Whether you choose in-memory caching, distributed caching, or client-side caching depends on your specific requirements and the nature of your application.

So, don't wait any longer! Implement caching in your Web API and witness the transformation in performance. Your users will thank you for the lightning-fast responses and smooth user experience.

Happy caching!

Share this article with our brand-new sharing buttons!

This week I worked on a few things in the blog. For example, you can now share this article on your favorite social media platform. Also, since we reached more than 10K views this month, I'm planning the future of this blog and of the YouTube channel. I want to start more series and create resources that help you on your learning journey, but I will talk about that later. Thanks for everything. I hope you have an amazing day!